
Why quote WikiNames?

Wiki names can use any charset and any character. When saving a wiki page to the file system, we must use a safe charset the file system supports. The safest solution is to "asciify" the name, so it can be saved on any file system.

In moin up to and including 1.2.x, non-ASCII characters in names were converted to _xx, where xx is the hex value of each byte of the character in the config.charset encoding. With utf-8, this format used at least 6 characters for each non-ASCII character in the wiki name, limiting the longest wiki name to 44 characters. This is very problematic for Hebrew, and even worse for Asian languages.

Since "_" should be freed for names like wiki_names, the format changed to (xx) in early 1.3 development versions. This format is even worse, since each Hebrew character uses 8 characters: (xx)(xx). This limits the longest wiki name to only 31 (255/8) characters.

Testing the 1.3 code shows that the practical maximum length for a Hebrew name is only 30 characters. A name with 31 characters causes IOError: [Errno 63] File name too long.

This 30-character name is converted to a 240-character file name, and with the timestamp added in the backup directory it becomes a 251-character name.
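To make the per-character cost concrete, here is a rough Python sketch of the two older schemes. It is not the moin code: the function names are hypothetical, and it assumes that only ASCII letters and digits are left unquoted and that config.charset is utf-8.

```python
import string

# Assumption for illustration: only ASCII letters and digits stay unquoted.
SAFE = frozenset(string.ascii_letters + string.digits)


def quote_pre13(name, charset='utf-8'):
    """Hypothetical pre-1.3 style: each unsafe byte becomes _xx."""
    return ''.join(
        ch if ch in SAFE else ''.join('_%02x' % b for b in ch.encode(charset))
        for ch in name)


def quote_early13(name, charset='utf-8'):
    """Hypothetical early-1.3 style: each unsafe byte becomes (xx)."""
    return ''.join(
        ch if ch in SAFE else ''.join('(%02x)' % b for b in ch.encode(charset))
        for ch in name)


hebrew = '\u05e0\u05d9\u05e8'  # the Hebrew name "ניר"
print(quote_pre13(hebrew))     # _d7_a0_d7_99_d7_a8  (6 characters per letter)
print(quote_early13(hebrew))   # (d7)(a0)(d7)(99)(d7)(a8)  (8 characters per letter)
print(len(quote_early13('\u05d0' * 30)))  # 240, matching the 30-character test
```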

Mac OS X notes

On Mac OS X, the file system uses utf8, with only one illegal character: ":". Maybe we should add a configuration option to turn off file name quoting. This could be a much better solution for the wiki administrator, especially if there are many wiki pages in non-Western languages. The downside is that the wiki data becomes less portable. If we allow this, we might need to create a converter for such wikis. This could be a big problem, since very long unquoted names may become too long to store once they are quoted.

Proposal

To save space while freeing the "_" character, the format will be (xx...): each whole run of unsafe characters (non-ASCII characters, and ASCII characters such as space, "_" and "/") is hex-encoded and enclosed in a single pair of parentheses.

Examples

| WikiName | File Name |
|---|---|
| WikiName | WikiName |
| free link | free(20)link |
| underscore_link | underscore(5f)link |
| Page/SubPage | Page(2f)SubPage |
| ניר | (d7a0d799d7a8) |
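A minimal Python sketch of the proposed scheme, assuming config.charset is utf-8 and that only ASCII letters and digits are stored literally (the real safe set may differ). quote_wikiname is a hypothetical name, not necessarily the function in the branch; the loop at the end checks it against the examples table.

```python
import re

# Assumption: only ASCII letters and digits are stored literally; every
# maximal run of other characters becomes one "(hex)" group.
UNSAFE_RUN = re.compile(r'[^A-Za-z0-9]+')


def quote_wikiname(name, charset='utf-8'):
    """Quote a wiki name for the file system, one group per unsafe run."""
    def quote_run(match):
        # bytes.hex() yields two lowercase hex digits per byte
        return '(' + match.group().encode(charset).hex() + ')'
    return UNSAFE_RUN.sub(quote_run, name)


# The examples from the table.
EXAMPLES = [
    ('WikiName', 'WikiName'),
    ('free link', 'free(20)link'),
    ('underscore_link', 'underscore(5f)link'),
    ('Page/SubPage', 'Page(2f)SubPage'),
    ('\u05e0\u05d9\u05e8', '(d7a0d799d7a8)'),
]

for wikiname, filename in EXAMPLES:
    assert quote_wikiname(wikiname) == filename, wikiname
```

Grouping a whole run is what saves the space: a run of n two-byte characters costs 4n + 2 characters instead of the 8n of the early 1.3 format.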

Limits

For Hebrew:

- The worst case is a theoretical name consisting of a single word of 60 Hebrew characters.

- Typical names using words and spaces can be about 66 characters long (about 11 words of 5 characters).

- Names with some ASCII words can be even longer.

Languages which use more than 2 bytes per character in utf8 will be limited to shorter names.
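The limits above can be checked with a small length calculator. This is a sketch under stated assumptions: only ASCII letters and digits stay unquoted, file names are limited to 255 characters, and the backup directory adds 11 characters (the 240 to 251 growth measured in the 1.3 test, assuming the suffix does not depend on the quoting format). The function names are hypothetical.

```python
import re

# Assumptions: ASCII letters and digits are stored literally, a file name may
# have at most 255 characters, and backups append 11 characters (240 -> 251
# in the 1.3 test).
UNSAFE_RUN = re.compile(r'[^A-Za-z0-9]+')
NAME_MAX = 255
BACKUP_SUFFIX = 11


def quoted_length(name, charset='utf-8'):
    """Length of the quoted file name, computed without building it."""
    length = len(name)
    for match in UNSAFE_RUN.finditer(name):
        run = match.group()
        # the run is replaced by "(" + two hex digits per byte + ")"
        length += 2 + 2 * len(run.encode(charset)) - len(run)
    return length


def fits_on_disk(name, charset='utf-8'):
    """True if the quoted name plus the backup suffix fits in NAME_MAX."""
    return quoted_length(name, charset) + BACKUP_SUFFIX <= NAME_MAX


print(fits_on_disk('\u05d0' * 60))  # True: 242 + 11 = 253
print(fits_on_disk('\u05d0' * 61))  # False: 246 + 11 = 257
print(fits_on_disk('\u4e2d' * 40))  # True: 242 + 11 = 253
print(fits_on_disk('\u4e2d' * 41))  # False: 248 + 11 = 259
```

With these assumptions a single 60-letter Hebrew word just fits and 61 letters do not, which matches the worst case above; a script that needs 3 bytes per character in utf8 gets only 40.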

Code

Here is the new quoting code. Please test it and add more test cases in different languages, so we can make sure it really works for everyone before it is merged into moin.

The code includes functions for quoting, unquoting, and converting from both pre-1.3 names and current 1.3 names, with tests for each function.

The unquoting function checks for invalid file names. Invalid names should not occur in normal use, but if one does, it will create a big mess unless we catch it. The code includes versions using .find(), a regex, and the valid-only regex \([a-fA-F0-9]+\), plus timing tests.
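A sketch of what a validating unquote can look like, built on the valid-only regex. It assumes utf-8 and the (hex...) format; unquote_wikiname and InvalidFileName are hypothetical names. Anything the quoting function could not have produced (stray parentheses, an odd number of hex digits, bytes that are not valid in the charset) raises an error instead of silently producing a wrong page name.

```python
import re

# A well-formed quoted group: "(" + hex digits + ")".
QUOTED = re.compile(r'\(([a-fA-F0-9]+)\)')


class InvalidFileName(ValueError):
    """Hypothetical error for names the quoting function cannot produce."""


def _literal(text, filename):
    # Parentheses outside a valid group mean the name is corrupt.
    if '(' in text or ')' in text:
        raise InvalidFileName('stray parenthesis in %r' % filename)
    return text


def unquote_wikiname(filename, charset='utf-8'):
    """Reverse the (hex...) quoting, rejecting malformed file names."""
    parts = []
    pos = 0
    for match in QUOTED.finditer(filename):
        parts.append(_literal(filename[pos:match.start()], filename))
        hexdigits = match.group(1)
        if len(hexdigits) % 2:
            raise InvalidFileName('odd number of hex digits in %r' % filename)
        try:
            parts.append(bytes.fromhex(hexdigits).decode(charset))
        except UnicodeDecodeError:
            raise InvalidFileName(
                'not valid %s in %r' % (charset, filename)) from None
        pos = match.end()
    parts.append(_literal(filename[pos:], filename))
    return ''.join(parts)
```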

These are the timings for 100 iterations on a mix of 10 names (G5 2G, Mac OS X 10.3.3, Python 2.3); every timing test reported "ok":

| unquoteWikiname variant | Time |
|---|---|
| using find | 0.0573 s |
| using regex | 0.0635 s |
| using find safely | 0.0571 s |
| using regex safely | 0.0641 s |
| using valid-only regex | 0.0622 s |

All variants perform similarly, so the safer code is preferable (as I predicted, but it was interesting to test).
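To repeat this kind of timing comparison on another machine, a timeit harness along these lines could be used. It is a sketch in modern Python, not the original test code: the sample names and both function names are assumptions, and the find() variant deliberately does less validation than the regex one. No timings are claimed here.

```python
import re
import timeit

# Sample quoted names (assumed; the original benchmark used its own mix of 10).
NAMES = ['WikiName', 'free(20)link', 'underscore(5f)link', 'Page(2f)SubPage',
         '(d7a0d799d7a8)', 'Nir(20)Soffer(2f)SubPage']


def unquote_find(filename, charset='utf-8'):
    """str.find() variant with minimal validation."""
    parts = []
    pos = 0
    while True:
        start = filename.find('(', pos)
        if start == -1:
            parts.append(filename[pos:])
            return ''.join(parts)
        end = filename.find(')', start)
        if end == -1:
            raise ValueError('unbalanced parenthesis in %r' % filename)
        parts.append(filename[pos:start])
        parts.append(bytes.fromhex(filename[start + 1:end]).decode(charset))
        pos = end + 1


QUOTED = re.compile(r'\(([a-fA-F0-9]+)\)')


def unquote_regex(filename, charset='utf-8'):
    """Regex variant: decodes only well-formed (hex) groups."""
    return QUOTED.sub(
        lambda m: bytes.fromhex(m.group(1)).decode(charset), filename)


def benchmark(func, iterations=100):
    """Seconds needed to unquote all NAMES `iterations` times."""
    return timeit.timeit(lambda: [func(n) for n in NAMES], number=iterations)


if __name__ == '__main__':
    for func in (unquote_find, unquote_regex):
        print('%s: %.4fs' % (func.__name__, benchmark(func)))
```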

I opened a branch, moin--quote--1.0, in my public read-only archive. You should be able to register it over HTTP: http://nirs.dyndns.org/nirs@freeshell.org--2004/

You can get the archive by:

    % tla register-archive nirs@freeshell.org--2004 \
        http://nirs.dyndns.org/nirs@freeshell.org--2004
    % tla get http://nirs.dyndns.org/nirs@freeshell.org--2004/moin--quote--1.0

Progress

Current code review

I searched the current code for code that works directly on quoted file names:

- 1 function in wikiutil does work on quoted names and should be fixed

- 1 function in wikiutil fixed.

- The other 27 places in various files call quoteWikinameFS safely and join the result to a path, etc. These seem OK.

No code looks for _2f or (2f) directly.

Test Wiki

Here is a test wiki using the new quoting scheme: http://nirs.dyndns.org/quote (the new code has not caused any new problems).

Example of a long page name in this wiki (63 characters, 52 Hebrew characters, 11 spaces)

File name too long errors

There is no error checking for too-long file names, and the resulting exceptions are not handled. The same happens in 1.1 and 1.2 when using very long names. It seems that we get errors even when the name is shorter than the theoretical maximum length, when os.mkdir() is called.

- We should display an error message when this happens, and supply a solution. For example, when renaming a file, we should show an error message and let the user enter a new name or cancel the operation.
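One possible shape for that error handling, as a sketch: create_page_dir is a hypothetical helper and the message text is illustrative. It catches only ENAMETOOLONG (Errno 63 on Mac OS X, a different number on Linux) and returns a message the UI could show, re-raising any other error.

```python
import errno
import os
import tempfile


def create_page_dir(data_dir, quoted_name):
    """Create a page directory; return None on success, or a user message.

    Hypothetical helper: the caller would show the message and let the
    user enter a shorter name or cancel.
    """
    path = os.path.join(data_dir, quoted_name)
    try:
        os.mkdir(path)
    except OSError as err:
        if err.errno == errno.ENAMETOOLONG:
            return ('This page name is too long to store (%d characters '
                    'after quoting). Please choose a shorter name.'
                    % len(quoted_name))
        raise  # any other failure is a real error
    return None


if __name__ == '__main__':
    with tempfile.TemporaryDirectory() as tmp:
        print(create_page_dir(tmp, 'WikiName'))  # None
        print(create_page_dir(tmp, 'a' * 300))   # the "too long" message
```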

Converting old file names

- We need a converter for both pre-1.3 file names and current 1.3 file names. We can simplify the admin's work by doing both conversions at the same time.
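A sketch of such a combined converter, assuming utf-8 for both the old and new names, and that only ASCII letters and digits are stored literally (consistent with the examples table). Pre-1.3 names use _xx per byte and early 1.3 names use (xx) per byte; the bytes are collected and decoded together, because a multi-byte character spans several groups. All names here are hypothetical.

```python
import re

# Assumption: ASCII letters and digits are stored literally in the new format.
UNSAFE_RUN = re.compile(r'[^A-Za-z0-9]+')
OLD_QUOTES = {
    'pre13': re.compile(r'_([0-9a-fA-F]{2})'),       # _xx per byte
    'early13': re.compile(r'\(([0-9a-fA-F]{2})\)'),  # (xx) per byte
}


def quote_wikiname(name, charset='utf-8'):
    """New format: one (hex...) group per run of unsafe characters."""
    return UNSAFE_RUN.sub(
        lambda m: '(' + m.group().encode(charset).hex() + ')', name)


def decode_old(filename, old_format, charset='utf-8'):
    """Turn an old quoted file name back into the Unicode page name."""
    pattern = OLD_QUOTES[old_format]
    raw = bytearray()
    pos = 0
    for match in pattern.finditer(filename):
        raw += filename[pos:match.start()].encode('ascii')  # literal text
        raw.append(int(match.group(1), 16))                 # one quoted byte
        pos = match.end()
    raw += filename[pos:].encode('ascii')
    # Decode all bytes at once: one character may span several groups.
    return raw.decode(charset)


def convert_name(filename, old_format, charset='utf-8'):
    """Convert a pre-1.3 or early-1.3 file name to the new format."""
    return quote_wikiname(decode_old(filename, old_format, charset), charset)
```

The new format is not always shorter: an isolated unsafe ASCII character costs 4 characters, (20), instead of 3, _20, so the converter should still check the length of each new name.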

More testing

- When the test module is fixed, add all the tests to test_wikiutil.py.

- The current tests use only utf8 encoding. Add more tests for other encodings.

Encoding Problems

The current code uses config.charset for file name encoding. What happens if a Unicode name cannot be encoded in a non-Unicode encoding such as iso-8859-1?

- if config.charset is iso-8859-1 (for example) you won't have any page names not in that encoding

But what if someone installed the wiki, then decided to move to iso-8859-1? All the existing utf8-encoded and quoted names will not be readable, because the system will try to decode them from config.charset instead of 'utf8'.

- if someone wants to do that, he has to convert the data, of course
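Both failure modes discussed here are easy to reproduce in Python. This snippet only illustrates standard codec behavior; it does not use moin code.

```python
name = '\u05e0\u05d9\u05e8'  # the Hebrew page name "ניר"

# 1. A Unicode name that iso-8859-1 cannot represent at all.
try:
    name.encode('iso-8859-1')
    encodable = True
except UnicodeEncodeError:
    encodable = False
print(encodable)  # False

# 2. Bytes quoted as utf8 but decoded with a different config.charset.
quoted_bytes = bytes.fromhex('d7a0d799d7a8')  # from the file name (d7a0d799d7a8)
as_utf8 = quoted_bytes.decode('utf-8')        # the original page name
as_latin1 = quoted_bytes.decode('iso-8859-1') # no error, but six wrong characters
print(as_utf8 == name, as_latin1 == name)     # True False
```

The second case is the more dangerous one: decoding with the wrong charset does not raise an error, it silently produces a different page name.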

If you want to change config.charset, you must convert the existing file names in the wiki, and possibly the log files, from utf8 to the other encoding, and this might be impossible (for example, when a name contains characters the new encoding cannot represent).

- Sure, but this is a theoretical problem, not a practical one.

Something as important as the storage implementation should not be left to the admin. If we use only utf8 or utf16 for file names, it will work for everyone, because you can recode everything into utf8 and back. -- NirSoffer 2004-06-05 11:47:54

- there is no point in enforcing utf8 ONLY for filenames and leaving file content in iso8859-1

- moin 1.3 will default to hexified utf-8 for filenames and also to utf-8 for content

- but if you switch config.charset to iso8859-1 (for whatever reason), it will use it both for hexified filenames AND content. If that doesn't work for you, it simply was a bad decision. As the docs state (see CHANGES) we won't support that anyway, so that is not our problem.

We should move to Unicode-only file name encoding; any other option is dangerous. The file name encoding does not have to be the same as the encoding used for the wiki text.

- config.charset IS utf-8 by default. And if somebody chooses not to do utf-8, we shouldn't force it on him.

- pages with utf-8 names that can't be referenced (because the content is iso8859-1 only) are pointless.

Existing links will break

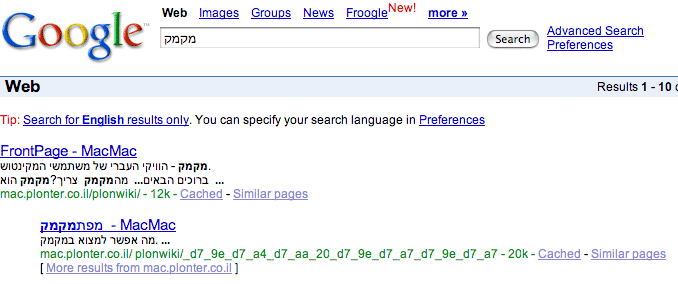

Current links in various sites and search engines that use pre 1.3 quoting structure - will break. Here is MacMac on the top of google search:

This link, http://mac.plonter.co.il/plonwiki/_e2_8c_98, will break when the wiki is converted to 1.3.

Links created by 1.2 wikis, such as this one, will also break: http://moinmoin.wikiwikiweb.de/MoinMoinBugs_2fCanCreateBadNames

1.3 links like http://localhost/quote/Nir%20Soffer/SubPage will not break.

We could prevent these broken links by also unquoting URLs the pre-1.3 way. I don't know if we should, since search engines will index the new pages quite fast.
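If we do decide to support old URLs, one option is a fallback lookup, sketched below. resolve_page and decode_pre13 are hypothetical, page_exists stands in for whatever existence check the wiki uses, utf-8 is assumed, and the URL segment is assumed to be already percent-decoded. The literal name is tried first because "_" is now a legal page name character; only if no page matches is the pre-1.3 decoding tried.

```python
import re

PRE13_QUOTE = re.compile(r'_([0-9a-fA-F]{2})')  # pre-1.3: _xx per byte


def decode_pre13(url_name, charset='utf-8'):
    """Undo pre-1.3 quoting, collecting bytes before decoding."""
    raw = bytearray()
    pos = 0
    for match in PRE13_QUOTE.finditer(url_name):
        raw += url_name[pos:match.start()].encode(charset)
        raw.append(int(match.group(1), 16))
        pos = match.end()
    raw += url_name[pos:].encode(charset)
    return raw.decode(charset)


def resolve_page(url_name, page_exists, charset='utf-8'):
    """Return the page name for a URL segment, or None if no page matches."""
    if page_exists(url_name):  # new-style name, possibly containing "_"
        return url_name
    try:
        legacy = decode_pre13(url_name, charset)
    except UnicodeDecodeError:
        return None
    if legacy != url_name and page_exists(legacy):
        return legacy  # the caller could redirect to the new URL
    return None
```

For example, the segment _e2_8c_98 decodes to U+2318 (⌘). The fallback is ambiguous for new names that happen to contain "_" followed by two hex digits, which is why the literal lookup comes first.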